Everything You Need to Know About How Slack Approaches Accessibility Testing

Updated: May 1, 2020

As your organization starts to scale, how do you ensure accessibility is still a priority?

In the webinar “How Slack Approaches Accessibility Testing,” three Slack engineers (Sharanya Viswanath, Kirstyn Torio, and Kristina Rudzinskaya) discuss how they approach and scale accessibility at Slack.

This blog post will provide organizations with actionable tips on how to grow an accessibility team and approach accessibility in the development process.

Approaching Accessibility at Slack

Slack is a communication platform that brings all of your organization’s tools together in one place. It allows teams to share information, files, and more, all in one workspace.

Engineers at Slack wanted to deliver the most delightful, inclusive experience to their users. They learned that the earlier accessibility was built into the development process, the more people would be able to use Slack. They focused not just on fixing bugs but on improving the overall user experience.

In the early stages, team members didn’t know what it took to build an accessible product. Values alone weren’t enough to kick things off; they also needed expertise.

The accessibility team was created three years ago to proactively build foundations and accessibility-specific features. However, they still had a problem: a single team couldn’t scale accessibility efforts on its own, because accessibility is something everyone should own.

A strategy called “Everyone Does Accessibility” was created to help team members answer the following questions:

- How do we increase a11y (accessibility) awareness?

- How do we fit accessibility into the existing process?

- How do we provide thorough coverage?

Tactically, the strategy gives each role involved in software development a simplified answer to the question, “What’s in it for me?” This is especially important for quality engineers, since they are closer to end users than anyone else.

Quality Culture at Slack

To achieve accessibility, the engineering team needed to address a few problems:

- Limited understanding of accessibility

- Fitting accessibility into the existing process

- Providing thorough coverage for all accessibility features

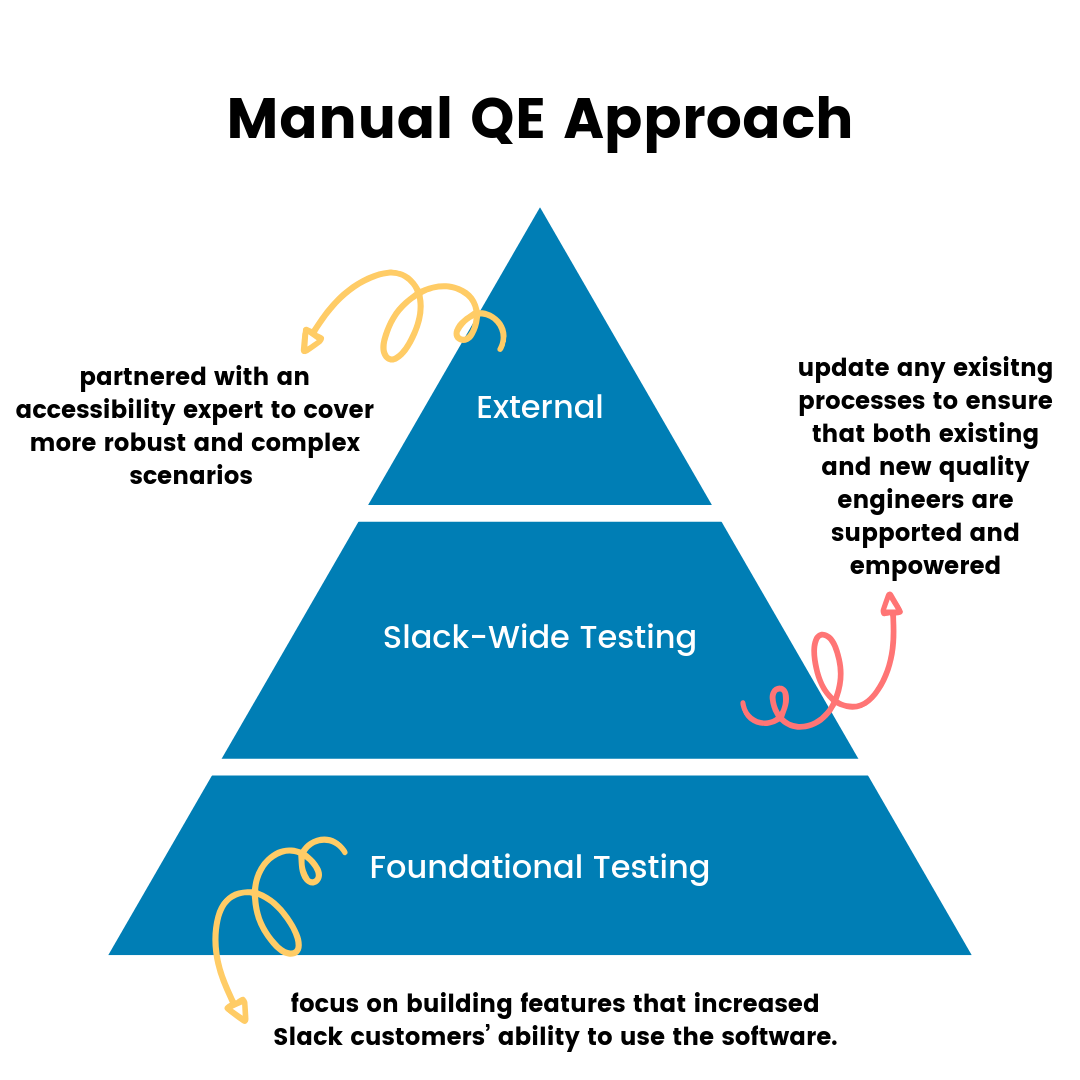

Initially, engineers at Slack took a manual approach to quality testing, as represented by this pyramid.

Scaling A11y Testing

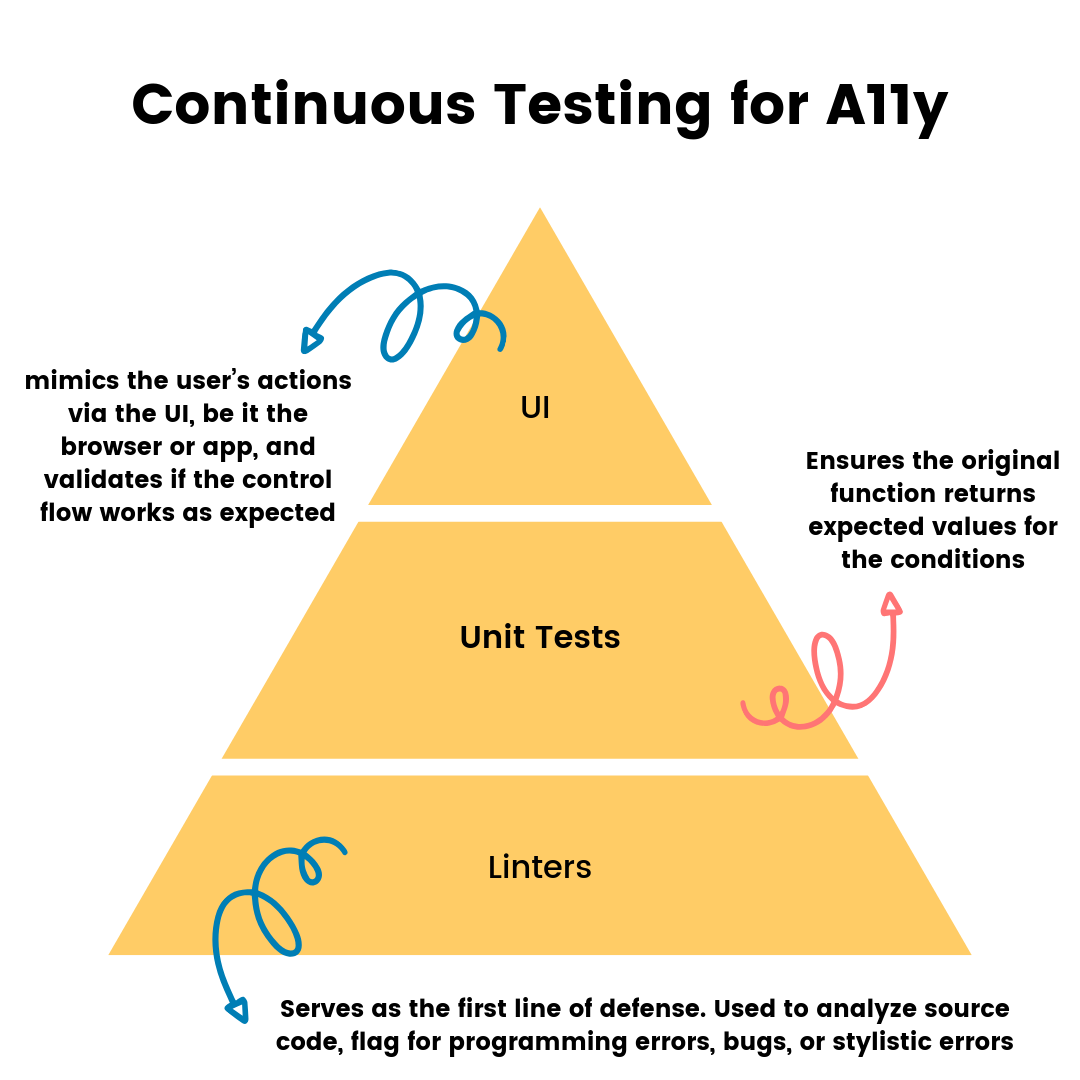

Engineers at Slack develop, test, and manage quality around the development schedule. How is this done? With automated tests, of course!

They strive to follow the test pyramid and have modeled their accessibility testing on it, relying on linters, unit tests, and UI tests. They’ve split these checks into those that run before code is merged and those that run after. Slack invests heavily in pre-merge checks to minimize regressions.
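The webinar doesn’t show Slack’s actual linter rules, so the following is only a minimal sketch of the kind of static accessibility check that sits at the base of the pyramid. It assumes the markup to check is available as an HTML string and uses Python’s standard-library `html.parser`; the rule itself (every `<img>` needs an `alt` attribute) and the names `MissingAltTextLinter` and `lint_markup` are hypothetical.

```python
from html.parser import HTMLParser


class MissingAltTextLinter(HTMLParser):
    """Hypothetical lint rule: flag <img> tags that have no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.violations: list[tuple[int, int]] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs. An empty alt="" is allowed,
        # because it marks an image as decorative for screen readers.
        # Self-closing <img /> tags also reach this method, since the default
        # handle_startendtag() calls handle_starttag().
        if tag == "img" and "alt" not in dict(attrs):
            self.violations.append(self.getpos())


def lint_markup(markup: str) -> list[tuple[int, int]]:
    """Return (line, column) positions of <img> tags missing alt text."""
    linter = MissingAltTextLinter()
    linter.feed(markup)
    linter.close()
    return linter.violations
```

Because a check like this needs no running app, it is cheap enough to run on every change, which is why linters form the widest layer of the pyramid.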

Pre-merge checks include:

- Static linters

- Unit tests

- Some functional tests

On the other hand, post-merge checks include:

- Heavier functional tests
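The split described in these lists can be sketched as a simple rule that assigns each check to a stage. This is a minimal, hypothetical illustration: the `Check` and `Stage` types, the `kind` labels, and the `heavy` flag are assumptions made for the example, not Slack’s CI configuration.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    PRE_MERGE = "pre-merge"    # runs on every change; failures block the merge
    POST_MERGE = "post-merge"  # runs after merge; failures are triaged afterwards


@dataclass(frozen=True)
class Check:
    name: str
    kind: str            # "lint", "unit", or "functional" (hypothetical labels)
    heavy: bool = False  # True for slow, expensive functional suites


def stage_for(check: Check) -> Stage:
    """Hypothetical rule mirroring the lists: linters, unit tests, and light
    functional tests run pre-merge; heavy functional tests run post-merge."""
    if check.kind == "functional" and check.heavy:
        return Stage.POST_MERGE
    return Stage.PRE_MERGE


def checks_for(stage: Stage, checks: list[Check]) -> list[str]:
    """Names of the checks that belong to the given stage, in input order."""
    return [c.name for c in checks if stage_for(c) is stage]
```

For example, a lint rule, a unit test, and a light keyboard-navigation test would land in the pre-merge stage, while a heavy end-to-end suite marked `heavy=True` would run post-merge.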

To see how the pre-merge and post-merge checks fit together, it’s important to understand how early Slack starts thinking about automation and how automation flows through their quality-assurance process.

Before development begins, the engineers take a few steps. First, the team meets. Then, product and engineering start thinking about a project. Once ideas are fully baked and approved by the internal council, members of quality and automation engineering join the kickoff meetings.

Post-kickoff, quality engineering comes up with a test plan and partners with development to identify the pieces that can be automated via unit or end-to-end tests. Then, prioritized work is tracked in Slack’s project management tool to ensure everything is done before the feature ships to users.

Both development and quality engineering write and maintain tests. Sometimes these tests don’t run reliably and become flaky. A flaky test is one that fails even though no changes have been made to the source code.
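That definition suggests a simple way to spot flakiness from test history: if the same test both passed and failed against the same source revision, the failure can’t be explained by a code change. The sketch below assumes each run is recorded with the commit it ran against; the `RunResult` record and `find_flaky_tests` function are hypothetical, not part of Slack’s tooling.

```python
from collections import defaultdict
from typing import NamedTuple


class RunResult(NamedTuple):
    test: str
    commit: str   # source revision the run was executed against
    passed: bool


def find_flaky_tests(runs: list[RunResult]) -> set[str]:
    """Flag tests whose outcome changed while the source code did not,
    i.e. the same test both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test, run.commit)].add(run.passed)
    # A set holding both True and False means mixed results on one commit.
    return {test for (test, _), seen in outcomes.items() if len(seen) == 2}
```

A test that failed on one commit and passed on a later one is not flagged, since a code change may explain the difference.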

When a test is developed, it can first be run several times in a stability test job. Once the test reaches 100% stability (it passes on every run), it is added to the automation test suite as a pre-merge, blocking test.
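A stability job of this kind can be sketched in a few lines: run the test repeatedly, compute the fraction of passing runs, and promote it only at 100%. This is a minimal illustration under assumptions: a test is modeled as a zero-argument callable returning `True` on pass, and the default of 20 runs and the names `stability_rate` and `promote_if_stable` are hypothetical, since the webinar doesn’t say how many runs Slack uses.

```python
from typing import Callable


def stability_rate(test: Callable[[], bool], runs: int = 20) -> float:
    """Run a test repeatedly and return the fraction of passing runs."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    passes = sum(1 for _ in range(runs) if test())
    return passes / runs


def promote_if_stable(test: Callable[[], bool], runs: int = 20) -> bool:
    """True only if the test passed on every run (100% stability), the bar
    the post describes for adding a test to the pre-merge suite."""
    return stability_rate(test, runs) == 1.0
```

Requiring every run to pass is deliberately strict: a blocking pre-merge test that fails even occasionally would stop engineers from merging correct code.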

It’s imperative to have processes in place to fix flaky tests based on test priority, severity, and stability rate.
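One way to turn those three factors into a work queue is to sort by them in turn. The sketch below is hypothetical: the numeric scales (1 = highest priority, 1 = most severe) and the tie-break order (priority, then severity, then lowest stability) are assumptions, not Slack’s documented policy.

```python
from dataclasses import dataclass


@dataclass
class FlakyTest:
    name: str
    priority: int     # 1 = highest priority (hypothetical scale)
    severity: int     # 1 = most severe (hypothetical scale)
    stability: float  # fraction of passing runs, 0.0 to 1.0


def fix_order(tests: list[FlakyTest]) -> list[str]:
    """Order flaky tests for fixing: highest priority first, then most
    severe, then least stable. The tie-break order is an assumption."""
    ranked = sorted(tests, key=lambda t: (t.priority, t.severity, t.stability))
    return [t.name for t in ranked]
```

Sorting on a tuple keeps the policy in one place, so changing which factor wins a tie is a one-line edit.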

After development, automated regression tests catch bugs post-merge. Each test failure is triaged and followed up with the appropriate engineers, who either fix forward, revert the change, or put the logic behind a feature flag to allow for further investigation and thorough testing.
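The three outcomes named here can be expressed as a small triage function. This is a hypothetical policy for illustration only: the `PostMergeFailure` fields and the preference order (fix forward when a quick fix exists, otherwise gate the logic behind a feature flag, otherwise revert) are assumptions, since the post lists the options but not how Slack chooses between them.

```python
from dataclasses import dataclass
from enum import Enum


class Resolution(Enum):
    FIX_FORWARD = "fix forward"
    REVERT = "revert"
    FEATURE_FLAG = "feature flag"


@dataclass
class PostMergeFailure:
    test: str
    owner: str                  # engineer or team to follow up with
    quick_fix_available: bool   # a small, well-understood fix exists
    can_gate_behind_flag: bool  # the new logic can be switched off by a flag


def triage(failure: PostMergeFailure) -> tuple[str, Resolution]:
    """Hypothetical triage policy: prefer fixing forward, otherwise hide the
    logic behind a feature flag for further investigation, otherwise revert.
    Returns who should follow up and the chosen resolution."""
    if failure.quick_fix_available:
        resolution = Resolution.FIX_FORWARD
    elif failure.can_gate_behind_flag:
        resolution = Resolution.FEATURE_FLAG
    else:
        resolution = Resolution.REVERT
    return failure.owner, resolution
```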

Slack believes it will be able to scale this strategy through its values and have more success stories to celebrate. Most importantly, it will have many more delighted users.

