Expert Insights: A Deep Dive Into Advancements in AI Dubbing with an Engineering Leader

In recent years, artificial intelligence (AI) has made significant strides in various industries, and the dubbing industry is no exception. The allure of AI dubbing systems is their promise of speed, efficiency, and cost-effectiveness. However, despite these advancements, AI-only solutions often fall short in delivering the quality and nuance that human-in-the-loop (HITL) dubbing systems can achieve.

In this blog post, we explore advancements in AI dubbing and the distinction between AI-only and HITL dubbing processes with insights from an SVP of Engineering, Dan Caddigan.

Exploring the Evolution of AI Dubbing

Meet the Expert

Dan Caddigan, SVP of Engineering at 3Play Media, brings 20+ years of technology leadership to the forefront of AI dubbing. His deep understanding of generative AI and its applications continues to advance AI dubbing technologies, reshaping the landscape of media localization.

At first glance, AI-generated dubs seem like a magical solution, offering a seamless, automated process. AI voices can sometimes be so realistic that it’s hard to tell them apart from human voices – a testament to how far the technology has come.

However, a closer examination reveals that these fully automated systems often lack the quality required for professional applications. As Caddigan puts it, “AI has made significant strides in the dubbing industry, becoming an essential tool for media production. However, given the number of moving pieces in the dubbing process, fully automated dubs have various categories of quality issues.”

The dubbing industry is evolving rapidly with advancements in AI technology, offering an ever-expanding range of options. Self-service AI tools let users handle their dubbing needs independently, but relying on AI from end to end can introduce significant limitations throughout the dubbing process.

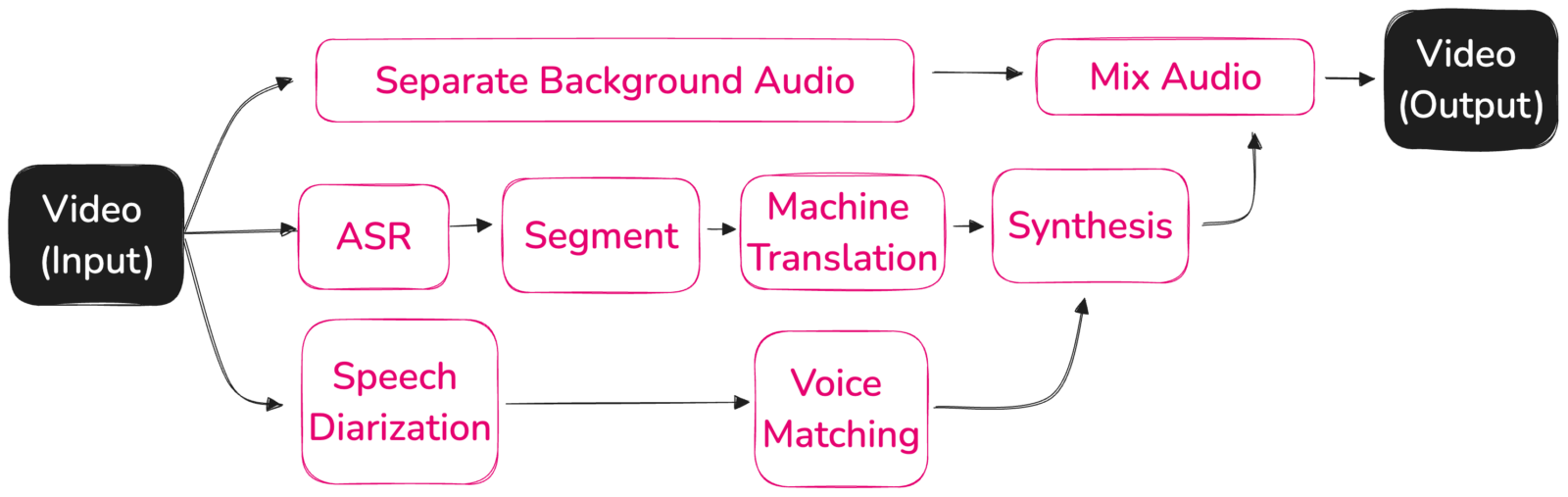

From Automation to Collaboration: AI-Only vs. Human-in-the-Loop Dubbing

To understand the limitations of AI-only dubbing and the benefits of a human-in-the-loop approach, let's look at the current state of AI dubbing technology at each step of the process.

Automatic Speech Recognition (ASR)

The first step in a fully automated dubbing process is transcription through Automatic Speech Recognition (ASR), which converts spoken language into text. Despite recent advancements, ASR technology is still far from perfect. Caddigan explains, "ASR is really only effective in a limited environment – single speaker, limited background noise, good audio quality and connection." When those conditions aren't met (multiple speakers, background noise, poor audio), "ASR quality falls apart quickly."

Even the most accurate ASR engines have an average Word Error Rate (WER) of around 7.5%, and any given media transcript has a 1 in 5 chance of a WER of 10% or worse. This inaccuracy can significantly affect the quality of the final dub. Caddigan says, "If you're going with full AI dubbing without [human] edits, you should expect the end result to have lower accuracy."
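
WER is conventionally the number of word substitutions, deletions, and insertions needed to turn the ASR output into a correct reference transcript, divided by the number of words in the reference. The sketch below computes it with a word-level edit distance. It is a minimal illustration, not 3Play's scoring method: it assumes simple lowercasing and whitespace tokenization, whereas real WER benchmarks also normalize punctuation, numbers, and spelling variants. Treating accuracy as `1 - WER` is also a simplification (WER can exceed 100% when the output contains many inserted words).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    # Simplified normalization (assumption): lowercase and split on whitespace.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = fewest edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


reference = "the quick brown fox jumps over the lazy dog today"
hypothesis = "the quick brown fox jumped over a lazy dog today"
wer = word_error_rate(reference, hypothesis)
meets_target = (1 - wer) >= 0.99  # 99%+ accuracy bar, with accuracy simplified to 1 - WER
print(f"WER: {wer:.1%}, meets 99% target: {meets_target}")  # WER: 20.0%, meets 99% target: False
```

Two substituted words out of ten give a 20% WER, or 80% accuracy, well short of the 99% target mentioned later in this section.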

To address this, there are a few options:

- Accept the poor-quality source: This will significantly affect the final dub’s quality and negatively impact the viewing experience and accessibility.

- Manually fix it: Editing and correcting multiple ASR transcripts can be time-consuming, especially when dubbing into many languages.

- Utilize a human-in-the-loop dubbing service: As part of the service, humans conduct quality checks on the initial ASR transcript, ensuring a more accurate starting point.

Whether you are editing the transcript yourself or enlisting a HITL service, it's important to ensure that the initial transcription is at least 99% accurate, which provides a solid foundation for the rest of the dubbing process.

Read the 2024 State of ASR Report

Machine Translation (MT)

Once the ASR transcript is generated, the dubbing process continues on to translation. If the initial transcript was created using only ASR and was not cleaned up, using machine translation (MT) will only compound the initial errors. “Errors in an ASR transcript multiply with machine translation. Even perfect source language can introduce inaccuracies, and initial errors will amplify in the translated output,” says Caddigan.
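
One way to see why errors compound is a toy model: if each pipeline stage independently gets a segment right with some probability, the chance that a segment comes through every stage error-free is the product of those probabilities. The accuracy figures below are hypothetical, chosen only for illustration, and independence is a simplifying assumption; as Caddigan notes, translation can also amplify an ASR error, so this product is a rough baseline rather than a prediction.

```python
def error_free_probability(stage_accuracies: list[float]) -> float:
    """Chance a segment passes every stage without error, assuming
    (a simplification) that errors at each stage are independent."""
    p = 1.0
    for accuracy in stage_accuracies:
        if not 0.0 <= accuracy <= 1.0:
            raise ValueError("each stage accuracy must be between 0 and 1")
        p *= accuracy
    return p


# Hypothetical per-segment accuracies, for illustration only.
raw_asr_then_mt = error_free_probability([0.90, 0.95])
reviewed_transcript_then_mt = error_free_probability([0.99, 0.95])
print(f"error-free segments: {raw_asr_then_mt:.1%} (raw ASR) vs "
      f"{reviewed_transcript_then_mt:.1%} (reviewed transcript)")
```

Even with the same machine translation step, starting from a cleaned-up transcript raises the share of segments that reach the dub intact.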

Recently, generative AI models have been rising on the machine translation leaderboards. This introduces a new challenge to dubbing workflows: hallucinations, where the model produces nonsensical output. Caddigan explains, "Generative models are simply predicting the next token (words), given what it's already seen. Most of the time, this produces an accurate result. Sometimes, though, it spits out gibberish, so it's always wise to have a human review generative results."
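
Because fluent-sounding but wrong output can slip past automated checks, a safer design routes suspicious segments to a human. The sketch below shows two cheap heuristics a workflow might use to prioritize review: an implausible source-to-target word-count ratio, and a repeated phrase (looping output is one common symptom of degenerate generation). The function name and thresholds are illustrative assumptions, not 3Play's tooling, and whitespace splitting assumes space-delimited languages. A segment that passes these checks can still be mistranslated, which is why human review of generative results remains the backstop.

```python
def review_flags(source: str, translation: str,
                 min_ratio: float = 0.5, max_ratio: float = 2.0,
                 ngram: int = 3) -> list[str]:
    """Return reasons a machine-translated segment should be sent to a human reviewer.
    The thresholds are illustrative assumptions, not tuned values."""
    src_words = source.split()
    tgt_words = translation.split()
    if not tgt_words:
        return ["empty translation"]

    flags = []
    # Heuristic 1: word-count ratio far outside the expected range.
    ratio = len(tgt_words) / max(len(src_words), 1)
    if not min_ratio <= ratio <= max_ratio:
        flags.append(f"length ratio {ratio:.2f} outside [{min_ratio}, {max_ratio}]")

    # Heuristic 2: the same n-word phrase appearing twice (looping output).
    seen = set()
    for i in range(len(tgt_words) - ngram + 1):
        gram = tuple(word.lower() for word in tgt_words[i:i + ngram])
        if gram in seen:
            flags.append("repeated phrase: " + " ".join(gram))
            break
        seen.add(gram)
    return flags


print(review_flags("Welcome back to the show", "Bienvenidos de nuevo al programa"))  # []
print(review_flags("Thank you", "gracias gracias gracias gracias gracias gracias"))
```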

Machine translation also struggles with regional terminology and brand-specific tone and language. Furthermore, the translated text must match the timing of the original speech, a task current MT models cannot accurately perform.

Caddigan notes, “Current Machine Translation models are not capable of producing translations that synthesize to a specific duration,” resulting in translations that are too long or too short. We’ll talk more about this challenge in the next section.

Synchronizing Dubbing with Speech

Timing is critical in dubbing and ultimately shapes the overall viewing experience. The translated text must match the timing of the speaker's mouth movements as closely as possible; otherwise, the dub is distracting to watch. For example, if the speaker talks for 2 seconds but machine translation produces a 3-second translation, the timing will be off and the viewer will notice.
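
The arithmetic behind that example is a simple ratio: to fit 3 seconds of dubbed speech into a 2-second slot, playback would have to run 1.5x faster. The sketch below computes that ratio and checks it against an acceptable range. The 0.9 to 1.1 bounds are hypothetical placeholders; how much rate change listeners tolerate depends on the language, voice, and content.

```python
def timing_fit(source_seconds: float, dub_seconds: float,
               min_rate: float = 0.9, max_rate: float = 1.1) -> tuple[float, bool]:
    """Return the playback-rate change needed to fit a dubbed segment into the
    original speaker's time slot, and whether it falls within an assumed acceptable range.
    A rate above 1 means the dub must be sped up; below 1, slowed down or padded."""
    if source_seconds <= 0:
        raise ValueError("source segment must have a positive duration")
    rate = dub_seconds / source_seconds
    return rate, min_rate <= rate <= max_rate


rate, acceptable = timing_fit(source_seconds=2.0, dub_seconds=3.0)
print(f"needs {rate:.2f}x playback; acceptable: {acceptable}")  # needs 1.50x playback; acceptable: False
```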

When a human is involved, they can intervene and re-translate a segment to better match the timing of the original audio. Caddigan highlights, "At 3Play, we have tools that help us resynthesize segments to ensure proper timing alignment, but AI-only systems only do one pass," a limitation that significantly affects synchronization.

Direct translations from English into other languages, such as German or Spanish, can increase spoken content by 20-30%. AI-only dubs must then speed the content up to unnatural levels, accept a misalignment, or some combination of both. With a HITL solution, by contrast, segments are reworded to preserve alignment and tempo.
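
A multi-pass workflow can be sketched as choosing, among several candidate wordings, the one whose synthesized duration best fits the original slot, and sending the segment back for another rewrite when none fits. In this sketch the candidates and their durations are hard-coded assumptions; in a HITL process the rewordings would come from a human translator and the durations from actually synthesizing each option. The 10% tolerance is also illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    text: str
    seconds: float  # duration of synthesized audio for this wording (hypothetical measurement)


def pick_wording(candidates: list[Candidate], slot_seconds: float,
                 tolerance: float = 0.10) -> Optional[Candidate]:
    """Return the candidate whose duration is closest to the original slot,
    or None if none fits within +/- tolerance (the segment needs another rewrite)."""
    best = min(candidates, key=lambda c: abs(c.seconds - slot_seconds), default=None)
    if best is None or abs(best.seconds - slot_seconds) > tolerance * slot_seconds:
        return None
    return best


slot = 4.0  # the English speaker talks for 4 seconds
options = [
    Candidate("direct translation", slot * 1.25),  # ~25% longer, within the 20-30% range
    Candidate("shortened rewording", 4.2),
]
choice = pick_wording(options, slot)
print(choice.text if choice else "needs another rewrite")  # shortened rewording
```

An AI-only, single-pass system effectively has one candidate and no fallback; the loop of rewording and re-checking is where human judgment earns its keep.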

Speech Synthesis

Speech synthesis technology has seen significant advancements, producing high-quality outcomes most of the time. However, there are limitations when using synthesized speech technology. Caddigan explains, “Some percentage of segments will sound unnatural due to the nature of some generative AI models.”

These speech synthesis models may also mispronounce words, requiring phonetic spelling adjustments to achieve proper pronunciation. Caddigan emphasizes, "You should expect some number of 'synthesis errors' after an AI-only dubbing run, based on the length of content. Each speaking segment has some probability of unnatural, garbled, or mispronounced speech. Multiply that out by the length of your content and that's how many errors you'll have – it's just math."
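
Caddigan's "it's just math" point can be made concrete. If each segment independently has a probability p of a synthesis error, content with n segments has an expected n * p flawed segments, and the probability of at least one is 1 - (1 - p)^n. The segment count and 2% error rate below are hypothetical, not measured figures.

```python
def synthesis_error_stats(num_segments: int, p_error: float) -> tuple[float, float]:
    """Expected number of flawed segments, and the probability of at least one,
    assuming each segment fails independently with probability p_error."""
    if not 0.0 <= p_error <= 1.0:
        raise ValueError("p_error must be between 0 and 1")
    expected = num_segments * p_error
    at_least_one = 1 - (1 - p_error) ** num_segments
    return expected, at_least_one


# Hypothetical: roughly 600 speaking segments with a 2% per-segment error rate.
expected, at_least_one = synthesis_error_stats(num_segments=600, p_error=0.02)
print(f"expected errors: {expected:.0f}; P(at least one): {at_least_one:.4f}")
```

Under these assumptions the expected count is 12, and at least one flawed segment is all but certain; halving the per-segment rate halves the expected count but still leaves several errors for someone to find.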

In a self-serve tool, for instance, users must review each segment of speech and rerun the AI speech model as needed. In contrast, a HITL dubbing solution ensures that someone is overseeing the quality of the synthesized speech outcome, meaning you don’t have to conduct quality checks yourself (saving you time).

Voice Matching

Voice matching is a new technique in AI dubbing in which each speaker's voice is matched against a large database of voices, making it seem as if their voice was cloned. In an AI-only system, the AI must guess how many speakers there are and exactly when each one is speaking. It then "matches" their voices from these audio samples. Currently, this process is highly error-prone because AI models still struggle with speaker diarization, the automatic process of segmenting an audio recording into distinct sections based on the identity of each speaker.

This leads to several pitfalls.

Caddigan explains, "If the model identifies the wrong number of speakers, it will blend voices and two speakers will be assigned the same voice." This confuses viewers, especially when the two blended speakers are talking to each other.
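
The blending failure can be illustrated with a toy version of diarization clustering. Real systems extract a learned voice embedding for each speech segment and cluster them with more sophisticated methods; here the two-dimensional embeddings are made up, and each segment is greedily assigned to the first known speaker whose reference embedding is similar enough by cosine similarity. With a strict threshold the two similar-sounding speakers stay separate; with a looser one, every segment collapses into a single speaker, which is exactly the case where two people would be assigned the same voice.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def assign_speakers(embeddings: list[list[float]], threshold: float) -> list[int]:
    """Toy greedy clustering: each segment joins the first known speaker whose reference
    embedding has cosine similarity >= threshold; otherwise it starts a new speaker."""
    references: list[list[float]] = []
    labels: list[int] = []
    for emb in embeddings:
        for label, ref in enumerate(references):
            if cosine_similarity(emb, ref) >= threshold:
                labels.append(label)
                break
        else:  # no existing speaker was similar enough
            references.append(emb)
            labels.append(len(references) - 1)
    return labels


# Made-up embeddings for two similar-sounding speakers, A and B, alternating turns.
segments = [[1.0, 0.2], [0.8, 0.6], [0.98, 0.25], [0.75, 0.62]]  # A, B, A, B
print(assign_speakers(segments, threshold=0.95))  # [0, 1, 0, 1]: two speakers
print(assign_speakers(segments, threshold=0.85))  # [0, 0, 0, 0]: voices blended into one
```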

3Play's HITL dubbing process addresses this by labeling every speaker during the initial transcription. This ensures accurate speaker identification and clean audio clips for the voice-matching step.
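
Once every segment carries a human-verified speaker label, gathering voice-matching material becomes straightforward bookkeeping. The sketch below groups labeled segments by speaker and checks whether each speaker has enough audio. The `(speaker, start, end)` tuple format and the 10-second minimum are hypothetical assumptions, and a real pipeline would also exclude overlapping speech.

```python
from collections import defaultdict


def clips_by_speaker(segments: list[tuple[str, float, float]],
                     min_total_seconds: float = 10.0) -> dict:
    """Group human-labeled (speaker, start_s, end_s) segments by speaker.
    Returns {speaker: (list of (start, end) clips, has_enough_audio)}.
    The 10-second minimum is a hypothetical placeholder."""
    clips = defaultdict(list)
    for speaker, start, end in segments:
        if end <= start:
            raise ValueError(f"segment for {speaker} has non-positive length: {start}-{end}")
        clips[speaker].append((start, end))

    report = {}
    for speaker, spans in clips.items():
        total = sum(end - start for start, end in spans)
        report[speaker] = (spans, total >= min_total_seconds)
    return report


transcript = [("Host", 0.0, 6.5), ("Guest", 6.5, 14.0), ("Host", 14.0, 19.0)]
for speaker, (spans, enough) in clips_by_speaker(transcript).items():
    print(speaker, spans, "enough audio" if enough else "needs more audio")
```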

Background Audio Extraction

Last, but certainly not least, is another important step in the AI dubbing process: background audio extraction. Background audio extraction models have gotten quite good, but they still produce noticeable artifacts at times (incorrectly identifying background audio as voice or vice versa). For maximum quality, the best approach is still to provide clean speaker tracks without background audio and to mix the background audio back in after dubbing.
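
Mixing the background back in after dubbing is, at its simplest, sample-by-sample addition of the dubbed voice track and the background track. The sketch below assumes both tracks are already float samples in [-1.0, 1.0] at the same sample rate, uses an arbitrary illustrative background gain, and hard-clips the result; production mixing would add loudness normalization, ducking under dialogue, and a proper limiter instead of clipping.

```python
def mix_tracks(dub: list[float], background: list[float],
               background_gain: float = 0.8) -> list[float]:
    """Mix a dubbed voice track with a background track, sample by sample.
    Assumes float samples in [-1.0, 1.0] at the same sample rate; the shorter
    track is padded with silence and the sum is hard-clipped to [-1.0, 1.0]."""
    length = max(len(dub), len(background))
    mixed = []
    for i in range(length):
        voice = dub[i] if i < len(dub) else 0.0
        bg = background[i] if i < len(background) else 0.0
        sample = voice + background_gain * bg
        mixed.append(max(-1.0, min(1.0, sample)))  # hard clip to the valid range
    return mixed


print(mix_tracks([0.5, 0.9, -0.2], [0.25, 0.5]))
```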

In reality, not everyone is looking for the same end result or has the same inputs for dubbing. Caddigan emphasizes the importance of flexible workflow options in a HITL dubbing service that meets people where they are and considers their unique needs.

Have separate audio tracks? Only have the combined source video? It’s crucial to find an AI dubbing solution that will take whatever inputs you have to ensure the best quality result.

Looking to the Future of AI Dubbing

The advancements in AI have undoubtedly revolutionized the dubbing industry, providing tools that can significantly streamline the process. However, the limitations of AI-only solutions highlight the necessity of human involvement to ensure high-quality results.

As Caddigan puts it, “You shouldn’t expect the first time you do an AI-only dub to be ‘right’. There are just way too many variables. AI will get you close and lead to faster turnaround times, but you’ll need a human touch to smooth over AI’s shortcomings.”

As the dubbing industry continues to evolve, the advancements in AI dubbing have brought significant improvements, particularly when combined with human oversight. AI is a powerful tool, but it works best in collaboration with humans, ensuring that the final product provides the quality that viewers want.

The 3Play (Dubbing) Way: Combining AI with Human Precision

In the evolving landscape of AI dubbing, maintaining high-quality standards is crucial. The advancements of AI dubbing are undeniable, but as we’ve seen, combining AI with human expertise is essential for achieving the best results.

At 3Play Media, our human-in-the-loop (HITL) process ensures that while AI handles much of the work, human experts oversee the whole process and final output to guarantee accuracy and naturalness.

As Caddigan highlights, “The HITL process is essential for delivering the quality our customers expect. It’s our unique blend of AI, use case-specific tooling, flexible workflows and human expertise that sets us apart.”

By leveraging cutting-edge AI technology alongside experienced professionals, 3Play Media provides a robust, flexible dubbing solution that meets diverse needs.

Note: The landscape of AI dubbing is evolving at a rapid pace, and while we strive to keep this information as current as possible, some details may change as the technology advances. We encourage readers to stay informed on the latest developments and revisit this blog for updates.