What Is Closed Captioning?

Updated: September 1, 2023


Closed captioning is time-synchronized text that reflects an audio track and can be read while watching visual content. The process of closed captioning involves transcribing the audio to text, dividing that text into chunks known as “caption frames,” and then synchronizing the caption frames with the video. Closed captioning typically appears at the bottom center of the video.
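The transcribe, divide, and synchronize steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it presumes each transcribed word already carries a start and end time in seconds, and the names `CaptionFrame`, `build_caption_frames`, and the `max_chars` limit are hypothetical choices for this example, not part of any captioning standard or tool.

```python
from dataclasses import dataclass


@dataclass
class CaptionFrame:
    """One caption frame: a chunk of text plus when it is shown (hypothetical type)."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def _close_frame(words):
    # A frame starts with its first word and ends with its last word.
    text = " ".join(word for word, _, _ in words)
    return CaptionFrame(start=words[0][1], end=words[-1][2], text=text)


def build_caption_frames(timed_words, max_chars=32):
    """Group (word, start, end) tuples into frames of at most max_chars characters.

    max_chars is an assumed limit for illustration; a single word longer
    than the limit still gets a frame of its own.
    """
    frames = []
    current = []
    for word, start, end in timed_words:
        candidate = " ".join([w for w, _, _ in current] + [word])
        if current and len(candidate) > max_chars:
            frames.append(_close_frame(current))
            current = []
        current.append((word, start, end))
    if current:
        frames.append(_close_frame(current))
    return frames


# Made-up word timings, purely for demonstration.
example_words = [
    ("Closed", 0.0, 0.4),
    ("captioning", 0.4, 1.0),
    ("is", 1.0, 1.1),
    ("time-synchronized", 1.1, 2.0),
    ("text", 2.0, 2.3),
]

for frame in build_caption_frames(example_words, max_chars=20):
    print(f"{frame.start:.1f}s to {frame.end:.1f}s: {frame.text}")
```

Real captioning workflows also weigh reading speed, line breaks, and sentence boundaries when dividing text; the character limit here stands in for those rules.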


To be considered fully accessible, captions should assume that the viewer cannot hear the audio at all. This means closed captions should include not only a transcription of spoken dialogue, but also any relevant sound effects, speaker identifications, or other non-speech elements that would impact a viewer’s understanding of the content. The inclusion of these elements makes closed captions significantly different from subtitles, which assume that the viewer can hear but cannot understand the language – meaning subtitles primarily serve to translate spoken dialogue.

How do you know which sound effects, speaker IDs, or non-speech elements are relevant enough to caption? Think about it this way: closed captions should account for any sound that is not visually apparent but is integral to the plot. For example, the sound of keys jingling should be denoted in captions if it’s important to the plot development – for instance, when a person is standing behind a locked door. However, it’s not important to denote this sound if the keys are jingling in someone’s pocket as they walk down the street.

Origin of Closed Captions

Closed captions originated in the early 1980s as the result of an FCC mandate that gradually became a requirement for most programming on broadcast television. Accessibility laws have evolved to require closed captions for Internet video, and captions have become widely available across various devices and media. Originally, captions were created as an accommodation for those who are d/Deaf or hard of hearing; however, studies have since shown that the majority of people who regularly use closed captions do not experience hearing loss.

The popular, more “mainstream” use of captions is largely due to their capability for improving user comprehension and removing language barriers, particularly for those who speak English as a second language. Captions can also help compensate for poor audio quality or a noisy background, which allows users to consume your content in sound-sensitive environments (like the workplace, a library, or the gym).

Closed Captioning Terminology

Captions vs. Transcripts

The primary difference between captions and a transcript is that a transcript is not synchronized with the media. Transcripts are sufficient for audio-only content like a podcast, but captions are required for videos or presentations that include visual content along with a voice-over.

Closed Captions vs. Open Captions

Closed captions allow the viewer to toggle the captions on and off, giving them control of whether captions are visible. On the other hand, open captions are burned into the video and cannot be turned off. Closed captions are more common with online video, while open captions can be found on kiosks.

Pre-Recorded vs. Live Captions

The difference between pre-recorded captions (“offline captions”) and live captions (“real-time captions”) is when the captioning is done. Live captions are produced and transmitted in real time by expert stenographers while the live event is happening. Pre-recorded captions are produced after the event has taken place and are typically attached to a video recording of the event.

Closed Captions vs. Subtitles

While these terms are used interchangeably abroad, in the United States there is a significant difference between the two. Closed captions are designed for viewers who are d/Deaf or hard of hearing, which means they should include sound effects, speaker IDs, and other non-speech elements. By comparison, subtitles are intended for viewers who do not speak the content’s source language. Because of this distinction, American subtitles only communicate spoken content.

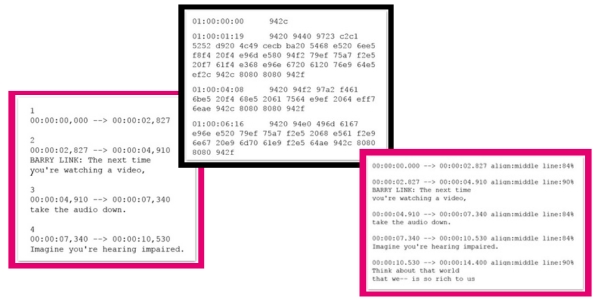

Closed Caption Formats

Many different caption file formats are in use, and the right one depends on your media player or device. The list below shows some common caption formats and their respective applications.

| Format Type | Use Cases |
|---|---|
| SCC | Broadcast, iOS, web media |
| SMPTE-TT | Web media |
| CAP | Broadcast |
| EBU.STL | PAL Broadcast |
| DFXP | Flash players |
| SRT | YouTube and web media |
| WebVTT | HTML5 |
| SAMI | Microsoft / Windows Media |
| QT | QuickText / QuickTime |
| STL | DVD encoding |
| CPT.XML | Captionate |
| RT | Real Media |

SRT (SubRip Subtitles) files are one of the most popular and widely accepted closed captioning file formats – and you can create them yourself! Check out our complete guide to SRT files for more information on the critical elements that make up an SRT file and how you can write them on your own computer.
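As a rough illustration of what an SRT file contains, the Python sketch below writes caption frames in the usual SRT layout: a sequence number, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing line, the caption text, and a blank line between entries. The function names `format_timestamp` and `to_srt` and the sample captions are hypothetical, chosen for this example only.

```python
def format_timestamp(seconds):
    """Convert seconds to the SRT timestamp form HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, remainder = divmod(total_ms, 3_600_000)
    minutes, remainder = divmod(remainder, 60_000)
    secs, millis = divmod(remainder, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(cues):
    """Build SRT text from (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for number, (start, end, text) in enumerate(cues, start=1):
        timing = f"{format_timestamp(start)} --> {format_timestamp(end)}"
        blocks.append(f"{number}\n{timing}\n{text}\n")
    # Joining with a newline leaves one blank line between entries.
    return "\n".join(blocks)


# Made-up captions, including a non-speech element in brackets.
example_cues = [
    (0.0, 1.1, "[keys jingling]"),
    (1.1, 2.5, "Who's there?"),
]

print(to_srt(example_cues))
```

Saving the printed text in a plain-text file with a `.srt` extension should give a file that most players accepting SRT can read, though individual platforms may have their own encoding or length requirements.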

This blog was originally published by Tole Khesin on January 15, 2015 and has since been updated for comprehensiveness, clarity, and accuracy.